Top Reasons to Incorporate CloudRider into Your Workflow

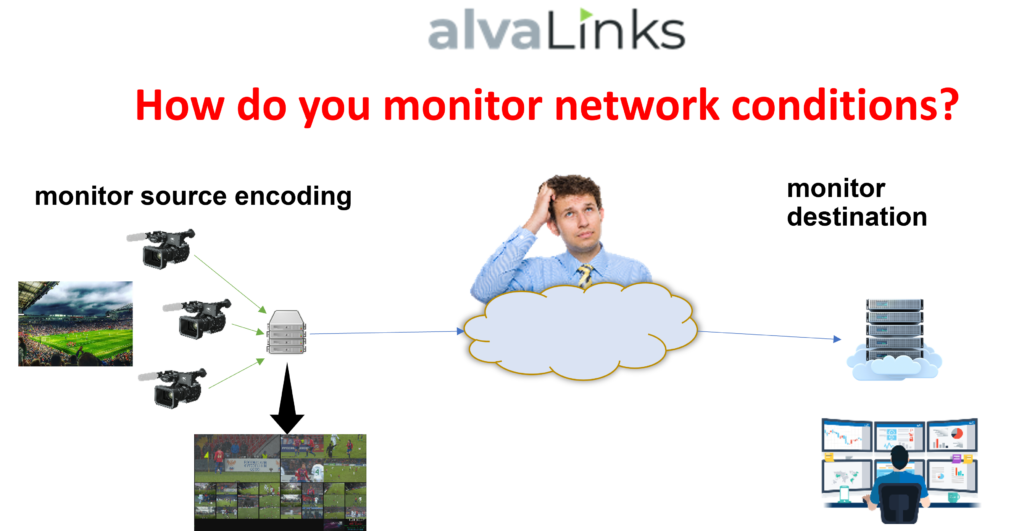

Experiencing video transport delivery issues?

- Do your video transport applications suffer from network delivery issues?

- Does your live stream to the cloud play back inconsistently?

- Is the IT department telling you the network is fine, yet the video shows black frames?

- Does your contracted 100 Mbps public Internet service seem adequate, yet the stream does not stay locked?

- Is your available IT support unable to determine why the video freezes?

- Would you benefit from a way to correlate video impairments with network issues?

- Do you wish there were a way to increase visibility and transparency across the entire content delivery network, including the segments outside your responsibility?

If you answered yes to one or more of these questions, continue reading to learn how AlvaLinks can help.

Recent studies and interviews conducted by AlvaLinks resulted in the following insights:

- Integrators face the daunting task of evaluating the customer network and then providing assistance when delivery fails. They need an urgent assessment to identify the impairments and a quick root cause analysis.

- Equipment providers are frustrated that their customers blame network problems on the hardware. Time and resources are spent determining whether the reported problem is caused by the provided equipment or by the network.

- Broadcast teams attempt to resolve and avoid problems to the best of their ability, but the business of streaming video cannot simply stop and wait for the cause to be revealed. A new set of tools is needed to quickly identify the root cause and implement solutions.

- Transport service providers are left in the dark when the customer’s network is outside their control, leaving them with no visibility into problems occurring on the customer’s side.

Many times, AlvaLinks has heard, “The bandwidth is there but the stream is delivered with interruptions” and, “Our video includes macro blocking and freezes, but the IT members report there are NO network problems”.

IT departments are charged with overseeing network availability, continuity of service, and protection against intrusion. Investigating video delivery impairments diverts them from their primary mission, so any resource that makes troubleshooting easier is welcome. The requirement is to identify the cause of video delivery impairments across a wide range of network configurations.

Why is video so sensitive?

To understand the sensitivity of video transport and unobstructed live video delivery, we need to understand the types of content delivery in use today: multi-program compressed transport streams, single-program compressed transport streams, and uncompressed single-program delivery streams. All share similar challenges, yet each has unique requirements.

Multi-program compressed transport delivery over IP multiplexes several individual program streams into a single transport stream. Each program stream is made of synchronized video, audio, and data elements. These elements are compressed by hardware or software tools and then correlated for uniform decoding and viewing. (That is, the included sync and clocking information allows the decoding playout device to keep the video, audio, and related data, such as closed captioning, synchronized on display.) One or more of these program streams are split into 188-byte transport packets. The transport packets are then encapsulated into IP packets for delivery over the transport network using UDP or RTP.

Because the video and audio are compressed, the loss of a single packet can have a tremendous ripple effect on decompression (decoding) and can affect more than one program within the transport stream. Even more critical, the receiving device must resynchronize the IP packets and play them back in their original order. Network jitter must be carefully accommodated so that packets are not decoded and displayed too early or too late, and so that the receive buffer neither underflows nor overflows. Lost packets must be recovered in time for accurate playout and display. A typical compressed transport stream runs between 2 and 200 megabits per second. Typical applications include live video transmission, such as news and sporting events, and NextGenTV broadcast. Delivery methods include CMTS/DOCSIS, ATSC 1.0 and 3.0, Direct-to-Home, and more.
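The packetization and loss sensitivity described above can be illustrated with a short sketch. It assumes seven 188-byte TS packets per UDP payload (1,316 bytes, a common choice that fits a 1,500-byte Ethernet MTU). It infers losses from each PID's 4-bit continuity counter. The function names are hypothetical, and this is not AlvaLinks code.

```python
"""Pack MPEG-TS packets into UDP payloads and infer losses (illustrative sketch)."""
from typing import Dict, Iterable, List

TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47
PACKETS_PER_DATAGRAM = 7  # assumption: 7 x 188 = 1316 bytes per datagram
NULL_PID = 0x1FFF         # null packets carry no meaningful continuity counter


def packetize(ts: bytes) -> List[bytes]:
    """Split a TS byte string into UDP payloads of up to 7 TS packets."""
    if len(ts) % TS_PACKET_SIZE:
        raise ValueError("stream length is not a multiple of 188 bytes")
    chunk = TS_PACKET_SIZE * PACKETS_PER_DATAGRAM
    return [ts[i:i + chunk] for i in range(0, len(ts), chunk)]


def count_cc_gaps(payloads: Iterable[bytes]) -> Dict[int, int]:
    """Estimate missing TS packets per PID from continuity-counter jumps.

    Simplified: duplicate packets and discontinuity indicators are not
    handled. Because the counter is 4 bits, a gap of 16 or more packets
    wraps around and is undercounted.
    """
    last_cc: Dict[int, int] = {}
    missing: Dict[int, int] = {}
    for payload in payloads:
        for off in range(0, len(payload), TS_PACKET_SIZE):
            pkt = payload[off:off + TS_PACKET_SIZE]
            if pkt[0] != SYNC_BYTE:
                raise ValueError("lost TS sync")
            pid = ((pkt[1] & 0x1F) << 8) | pkt[2]
            has_payload = bool(pkt[3] & 0x10)  # adaptation_field_control payload bit
            if pid == NULL_PID or not has_payload:
                continue  # the counter only advances on packets carrying payload
            cc = pkt[3] & 0x0F
            if pid in last_cc:
                gap = (cc - last_cc[pid] - 1) % 16
                if gap:
                    missing[pid] = missing.get(pid, 0) + gap
            last_cc[pid] = cc
    return missing


def make_ts_packet(pid: int, cc: int) -> bytes:
    """Build a minimal payload-only TS packet (helper for the example)."""
    header = bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10 | (cc & 0x0F)])
    return header + bytes(TS_PACKET_SIZE - 4)


if __name__ == "__main__":
    stream = b"".join(make_ts_packet(0x100, i % 16) for i in range(21))
    datagrams = packetize(stream)
    # Dropping a single IP datagram loses seven TS packets at once.
    print(count_cc_gaps([datagrams[0], datagrams[2]]))  # prints {256: 7}
```

The example shows why one lost IP packet is expensive. With seven TS packets per datagram, a single network loss removes seven transport packets, possibly across several programs.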

A single-program compressed video stream is defined by compressing each video and audio frame individually. There is no dependency between frames, such as the relationship between I, P, and B frames (intra-coded, predicted, and bi-directionally predicted frames). This reduces complexity and increases robustness, at the expense of a higher bit rate than regular inter-frame video compression. The video stream is also delivered to the receiver over UDP/RTP. Its main advantage is that a lost packet affects only one frame. The recovery method can request a resend of the lost packet or a retransmission of the entire frame. Common applications include homeland security, video conferencing, WebRTC (including Microsoft Teams, Zoom, and Google Meet), and many others. Professional production uses JPEG-XS at bitrates from 125 megabits per second to 1.25 gigabits per second, with less than one frame of delay. The basic receiver functionality remains the same: a buffer that absorbs jitter, replaces lost or corrupted packets, reorders packets, and reconstructs the original capture rate for playout.
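The receiver behavior described above (absorb jitter, reorder, and report lost packets before playout) can be sketched as a small jitter buffer. The sketch rests on three assumptions: sender timestamps are directly comparable with the receiver clock, sequence numbers do not wrap, and lost packets are only reported, not concealed or re-requested. The class and method names are hypothetical.

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(order=True)
class Packet:
    seq: int                                   # heap ordering uses seq only
    send_ts: float = field(compare=False)      # sender timestamp, seconds
    payload: bytes = field(compare=False, default=b"")


class JitterBuffer:
    """Hold packets for a fixed playout delay, then release them in order."""

    def __init__(self, playout_delay: float) -> None:
        self.playout_delay = playout_delay     # jitter headroom in seconds
        self._heap: List[Packet] = []
        self._next_seq: Optional[int] = None

    def push(self, pkt: Packet) -> None:
        # A packet whose slot has already been played out arrived too late.
        if self._next_seq is not None and pkt.seq < self._next_seq:
            return
        heapq.heappush(self._heap, pkt)

    def pop_due(self, now: float) -> Tuple[List[Packet], List[int]]:
        """Release packets whose playout time has arrived; report gaps as lost."""
        released: List[Packet] = []
        lost: List[int] = []
        while self._heap and self._heap[0].send_ts + self.playout_delay <= now:
            pkt = heapq.heappop(self._heap)
            if self._next_seq is not None:
                if pkt.seq < self._next_seq:
                    continue  # duplicate of a packet already released
                lost.extend(range(self._next_seq, pkt.seq))
            released.append(pkt)
            self._next_seq = pkt.seq + 1
        return released, lost


if __name__ == "__main__":
    buf = JitterBuffer(playout_delay=0.05)
    for seq in (0, 2, 1, 4):                   # out of order; seq 3 never arrives
        buf.push(Packet(seq, send_ts=seq * 0.01))
    out, lost = buf.pop_due(now=0.1)
    print([p.seq for p in out], lost)          # prints [0, 1, 2, 4] [3]
```

The playout delay is the trade-off. A larger value tolerates more jitter and allows time for recovery, but it adds end-to-end latency.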

In recent years, reliable IP transport delivery solutions have been introduced by Zixi, Haivision, AWS MediaConnect, and others. Today, many workflows use formal protocol solutions such as SRT, Zixi, or RIST. These are excellent solutions for compensating for network impairments. However, the common belief that protected transport protocols will overcome any network challenge is not entirely true. The user must still understand the actual network conditions in order to refine the configuration of the protected delivery protocol. This includes:

- defining an accurate latency value;
- setting the re-request limits for ARQ (Automatic Repeat reQuest, i.e., re-requesting missing packets);
- setting the maximum bitrate not to exceed;
- configuring backup or redundant delivery paths with seamless switching.

Most users don’t know what to specify, or whether the defined configuration is strong enough to overcome network impairments. Additionally, the user should be aware of the overall network behavior over time, as conditions can always change. The applied transport configuration must be able to compensate for these changes in network conditions and performance.
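To see why latency and bitrate settings depend on measured network conditions, consider a deliberately simplified model:

- Each ARQ re-request costs one round trip, and one extra round trip covers loss detection and processing.
- The peak jitter is added on top of those round trips.
- Retransmission overhead assumes that every attempt is lost independently with the same probability.

This model and the `plan_arq` name are hypothetical illustrations. They are not the configuration rules of SRT, Zixi, RIST, or AlvaLinks.

```python
from dataclasses import dataclass


@dataclass
class ArqPlan:
    latency_ms: float         # receive buffer to configure
    max_bitrate_mbps: float   # ceiling including retransmissions and headroom
    residual_loss: float      # chance a packet is still missing after all retries


def plan_arq(rtt_ms: float, jitter_ms: float, retries: int, stream_mbps: float,
             loss_ratio: float, headroom: float = 0.25) -> ArqPlan:
    """Size latency and bitrate ceiling under a simple independent-loss model."""
    if not 0.0 <= loss_ratio < 1.0:
        raise ValueError("loss_ratio must be in [0, 1)")
    if retries < 0:
        raise ValueError("retries must be non-negative")
    # One RTT per retransmission attempt plus one RTT of detection margin,
    # then the peak jitter on top (assumed model, not a vendor formula).
    latency = (retries + 1) * rtt_ms + jitter_ms
    # A lost packet is resent; the resend may itself be lost with the same
    # probability, up to `retries` times.
    extra = sum(loss_ratio ** k for k in range(1, retries + 1))
    max_rate = stream_mbps * (1.0 + extra) * (1.0 + headroom)
    residual = loss_ratio ** (retries + 1)
    return ArqPlan(latency, max_rate, residual)


if __name__ == "__main__":
    plan = plan_arq(rtt_ms=80, jitter_ms=20, retries=3, stream_mbps=10, loss_ratio=0.01)
    print(plan.latency_ms)  # 340
```

Even in this toy model, every input (RTT, jitter, loss) is a measured network property. When those measurements drift over time, the right configuration drifts with them.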

The uncompressed single-program stream has become prevalent in the last few years. Raw video and audio data are encapsulated into IP packets and sent over the content delivery IP network. Delivery of uncompressed video resembles the previous two methods, but this type of data cannot tolerate packet loss, and jitter must be strictly controlled. To achieve this, two identical copies of the stream are sent in parallel over different paths, with a minimal, predefined delay between them. The data cannot be buffered beyond a few packets if the delay is to stay under one frame. The receiver gathers packets from both paths and produces a single output stream; any packet lost on one path is recovered from its duplicate on the other. The main challenge is to keep latency and jitter equal on both routes and to identify cases where they diverge. The uncompressed video stream also includes a tightly controlled timing mechanism to maintain the necessary synchronization. A typical bitrate for such streams is 1.5 to 12 gigabits per second per program stream, with the duplicate program stream delivered across a diverse path. Consideration must also be given to cases where the audio and video data are encrypted, leaving only the basic IP header parameters exposed.
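The dual-path recovery described above can be sketched as a merge. For professional media, this approach is standardized as SMPTE ST 2022-7 seamless protection switching. The merge keeps the first copy of each sequence number and records how far apart the two copies arrived. The input format, the function name, and the absence of 16-bit sequence wraparound handling are simplifying assumptions for illustration.

```python
from typing import Dict, Iterable, List, Tuple

Arrival = Tuple[str, int, float]  # (path id, sequence number, arrival time in s)


def merge_paths(arrivals: Iterable[Arrival]) -> Tuple[List[int], Dict[str, int], float]:
    """Merge duplicate streams received over two paths.

    Returns the recovered sequence numbers, how many packets each path
    supplied first, and the largest arrival-time skew between the two
    copies of the same packet (a growing skew means the paths are diverging).
    A real receiver would also bound how long it waits for the second copy.
    """
    first_seen: Dict[int, Tuple[str, float]] = {}
    supplied: Dict[str, int] = {}
    max_skew = 0.0
    for path, seq, t in sorted(arrivals, key=lambda a: a[2]):
        if seq not in first_seen:
            first_seen[seq] = (path, t)              # first copy wins
            supplied[path] = supplied.get(path, 0) + 1
        elif first_seen[seq][0] != path:
            max_skew = max(max_skew, t - first_seen[seq][1])
    return sorted(first_seen), supplied, max_skew


if __name__ == "__main__":
    arrivals = [("A", 0, 0.0), ("A", 1, 0.001), ("A", 3, 0.003),   # A lost seq 2
                ("B", 0, 0.0005), ("B", 1, 0.0015), ("B", 2, 0.0025), ("B", 3, 0.0035)]
    print(merge_paths(arrivals))
```

Tracking the skew, not just the recovered output, is what reveals whether the two routes still have matching latency.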

The AlvaLinks CloudRider solution is designed with these challenges in mind. It uses ‘agents’ deployed at various locations along the path of the video streams (primarily the source and destination). These agents work with cloud-based servers: a control server, a database, and a web-based user interface server.

AlvaLinks CloudRider Agents perform the following tasks:

- Monitor local traffic and IP flow statistics.

- Examine and compare the paths available between agents in both directions.

- Transmit and analyze test streams between agents to examine path behavior.

- Record the resulting data within a common, time-based repository database for further analysis.

The control server is responsible for managing the agents’ behavior and running applications.

Each agent transmits the collected information to a common database for fast analysis.

The database collects the statistics from each agent for evaluation and presentation to the user through a web UI. The web UI server pulls the statistics from the controller and creates the visualizations for the user.
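The data flow above has agents pushing measurements into a common, time-based repository that the web UI then queries. It can be sketched with an in-memory store that groups samples into one-second buckets per path and metric. The `Sample` record and `TimeSeriesStore` class are hypothetical and say nothing about how CloudRider actually stores its data.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, Dict, List, Tuple


@dataclass(frozen=True)
class Sample:
    agent_id: str
    path_id: str
    ts: float      # seconds since the epoch (assumes agents share a time base)
    metric: str    # e.g. "latency_ms", "jitter_ms", "loss"
    value: float


class TimeSeriesStore:
    """In-memory, per-second buckets keyed by (path, metric)."""

    def __init__(self) -> None:
        self._buckets: DefaultDict[Tuple[str, str], DefaultDict[int, List[float]]] = (
            defaultdict(lambda: defaultdict(list))
        )

    def ingest(self, sample: Sample) -> None:
        second = int(sample.ts)  # truncate to the containing second
        self._buckets[(sample.path_id, sample.metric)][second].append(sample.value)

    def per_second_max(self, path_id: str, metric: str,
                       start: int, end: int) -> Dict[int, float]:
        """Worst value per second in [start, end), as a UI chart might plot it."""
        buckets = self._buckets.get((path_id, metric), {})
        return {sec: max(vals) for sec, vals in sorted(buckets.items())
                if start <= sec < end}


if __name__ == "__main__":
    store = TimeSeriesStore()
    for ts, value in ((100.2, 40.0), (100.7, 55.0), (101.1, 42.0)):
        store.ingest(Sample("agent-src", "path-1", ts, "latency_ms", value))
    print(store.per_second_max("path-1", "latency_ms", 100, 102))
```

Keying every sample by time lets measurements from the sending and receiving agents be lined up second by second.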

The AlvaLinks innovation lies in proactive testing and evaluation of every available path between AlvaLinks Agents. Each route is evaluated to detect hop changes and network impairments. Hop changes include Internet route changes influenced by BGP (Border Gateway Protocol) as well as changes within the cloud. Earlier methods gathered statistics based on transmit and/or receive bandwidth, or per individual flow. AlvaLinks instead draws detailed information from a proactively generated test stream.

The AlvaLinks agent performs the following tasks:

Route detection & monitoring

Many new deployments use multiple paths to implement redundant delivery or dynamically switchable paths (similar to the approach of Multipath TCP). One responsibility of the AlvaLinks application is to detect and monitor each available path. Some paths may originate outside the influence of the source or destination organizations. For example, a load balancer at the edge of a source organization may use three different ISPs to provide high quality of service, while the destination receives a single input or multiple inputs. Using route detection, the AlvaLinks Agent can engage, analyze, and comprehensively examine and test each path. Detection leads into a monitoring procedure in which each path (the individual segments along the overall route) is evaluated using established techniques such as traceroute. The difference is that AlvaLinks uses UDP and compares results across different ports. This reveals NAT (Network Address Translation), the influence of network load balancers, and reroutes along the path, making the results precise, dynamic, and revealing. Each route is tested every 100 ms for high accuracy.
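The multi-port route detection described above can be illustrated with the analysis step alone. The input is a set of hop lists from UDP traceroute-style probes sent from several source ports. Different hop sequences across ports suggest a load balancer hashing on the ports. A changed hop sequence for the same port between probe rounds indicates a route change, such as one following a BGP update. Sending the probes is out of scope here, the data layout and function names are hypothetical, and the addresses come from documentation ranges.

```python
from typing import Dict, List, Optional

# Hypothetical layout: source port -> hop addresses in TTL order (None = no reply)
Round = Dict[int, List[Optional[str]]]


def distinct_paths(probe_round: Round) -> int:
    """Number of different hop sequences seen across source ports.

    More than one suggests a per-flow load balancer hashing on the UDP ports.
    """
    return len({tuple(hops) for hops in probe_round.values()})


def route_changes(prev: Round, curr: Round) -> Dict[int, int]:
    """First TTL (1-based) at which each port's route changed between rounds.

    An unanswered probe (None) is compared like any other hop, so a single
    lost reply also shows up as a change; a real monitor would require the
    change to persist across several rounds before reporting it.
    """
    changes: Dict[int, int] = {}
    for port in sorted(prev.keys() & curr.keys()):
        a, b = prev[port], curr[port]
        for ttl in range(1, max(len(a), len(b)) + 1):
            hop_a = a[ttl - 1] if ttl <= len(a) else None
            hop_b = b[ttl - 1] if ttl <= len(b) else None
            if hop_a != hop_b:
                changes[port] = ttl
                break
    return changes


if __name__ == "__main__":
    before = {33434: ["10.0.0.1", "192.0.2.1", "198.51.100.7"],
              33435: ["10.0.0.1", "192.0.2.9", "198.51.100.7"]}
    after = {33434: ["10.0.0.1", "192.0.2.5", "198.51.100.7"],
             33435: ["10.0.0.1", "192.0.2.9", "198.51.100.7"]}
    print(distinct_paths(before), route_changes(before, after))
```

Probing from several ports matters because a load balancer that hashes on ports sends each port down its own path. Probing from a single port would miss the other paths.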

Path analysis

AlvaLinks Agents generate a test stream whose packets are the size of a typical video stream packet (1360 bytes). Each packet carries a timestamp, a sequence index, and information about the source Agent. The data is sent at a constant rate to the destination Agent across all detected routes between the originating Agent and the destination Agent. The receiving Agent inspects the test stream to calculate bitrate, packet loss, jitter, latency, and TTL/hop changes. (TTL, the IP Time To Live field, is decremented by every router hop, so a change in the received TTL reveals a change in the number of hops.) These parameters describe the conditions experienced by the video stream being supervised, and the same conditions can also affect other streams along that path. The user can correlate events on a specific flow with problems identified by the test stream. Furthermore, both the sending and receiving Agents compile the resulting test information in a common database. This enables an informative web user interface that presents a consolidated visual comparison of the test stream performance. No other solution detects packet-by-packet jitter deviation against the source while accurately calculating latency changes. Currently, the only available methods are labor-intensive offline comparisons of packet captures.
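The test stream analysis above can be sketched as follows. The 1360-byte packet size comes from the text; the following are assumptions:

- The 16-byte header layout (sequence number, send timestamp, agent ID) is invented for this sketch.
- Latency is only meaningful if the agents' clocks are synchronized.
- Jitter uses the RFC 3550 interarrival estimator as a stand-in for whatever AlvaLinks computes.

Reading the received TTL would require a socket option, so it is omitted.

```python
import struct
from dataclasses import dataclass
from typing import Iterable, List, Tuple

PACKET_SIZE = 1360                 # probe size given in the text
HEADER = struct.Struct("!IdI")     # assumed layout: seq (u32), send time (f64 s), agent id (u32)


def build_probe(seq: int, send_ts: float, agent_id: int) -> bytes:
    """Build one fixed-size probe: header followed by zero padding."""
    return HEADER.pack(seq, send_ts, agent_id) + bytes(PACKET_SIZE - HEADER.size)


@dataclass
class PathStats:
    received: int
    lost: int              # gaps between lowest and highest seq (trailing loss not seen)
    mean_latency_s: float
    jitter_s: float        # RFC 3550 interarrival jitter estimate
    bitrate_bps: float     # rough receive rate over the observed span


def analyze(arrivals: Iterable[Tuple[bytes, float]]) -> PathStats:
    """Compute path statistics from (probe bytes, receive time) in arrival order."""
    seqs: List[int] = []
    transits: List[float] = []
    total_bytes = 0
    first_rx = last_rx = 0.0
    jitter = 0.0
    for data, recv_ts in arrivals:
        seq, send_ts, _agent = HEADER.unpack_from(data)
        transit = recv_ts - send_ts            # one-way delay if clocks agree
        if transits:
            d = abs(transit - transits[-1])
            jitter += (d - jitter) / 16.0      # RFC 3550, section 6.4.1
        else:
            first_rx = recv_ts
        seqs.append(seq)
        transits.append(transit)
        total_bytes += len(data)
        last_rx = recv_ts
    if not seqs:
        raise ValueError("no probes received")
    unique = len(set(seqs))
    expected = max(seqs) - min(seqs) + 1
    span = last_rx - first_rx
    return PathStats(
        received=unique,
        lost=expected - unique,
        mean_latency_s=sum(transits) / len(transits),
        jitter_s=jitter,
        bitrate_bps=total_bytes * 8 / span if span > 0 else 0.0,
    )


if __name__ == "__main__":
    probes = [(build_probe(i, i * 0.01, 7), i * 0.01 + 0.05) for i in range(5) if i != 2]
    print(analyze(probes))
```

Embedding the send time and sequence number in every probe is what allows per-packet latency and jitter to be compared against the source, rather than inferred from aggregate counters.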

Path testing

The AlvaLinks CloudRider Agent can also use the internally generated test streams to perform a step-by-step bitrate evaluation of one or more routes, assessing the performance limits of each, before and during live transmission. The AlvaLinks application increases the test stream bitrate step by step and evaluates the impact at the receiver. It registers the changes in packet latency, packet jitter, packet loss, and router hops. The test can be run in one or both directions of the route, and it can run while other streams are traversing the same route. Stop logic signals the sending Agent to halt testing if the content stream is affected. Alternatives such as ‘SpeedTest’ or ‘iPerf’ cannot match this behavior, because they are destructive in nature and do not take other services on the same route into account. This technique allows true route bandwidth evaluation and an understanding of the route’s real potential and limitations. No other solution provides this capability today.
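The stepped bitrate test with stop logic can be sketched as a loop that raises the probe rate, measures, and backs off at the first sign of impact. The `measure` callback stands in for sending probes and collecting receiver results. The thresholds (0.1% loss, a 20 ms latency rise) are arbitrary example values, not AlvaLinks defaults.

```python
from typing import Callable, List, NamedTuple, Tuple


class StepResult(NamedTuple):
    rate_mbps: float
    loss: float          # fraction of probe packets lost at this step
    latency_ms: float    # mean latency at this step


def step_test(measure: Callable[[float], StepResult], start_mbps: float,
              step_mbps: float, max_mbps: float, baseline_latency_ms: float,
              max_loss: float = 0.001, max_latency_rise_ms: float = 20.0
              ) -> Tuple[float, List[StepResult]]:
    """Return the highest rate that showed no impact, plus the step history."""
    if step_mbps <= 0:
        raise ValueError("step_mbps must be positive")
    history: List[StepResult] = []
    safe_rate = 0.0
    step = 0
    while True:
        rate = start_mbps + step * step_mbps   # avoids float drift from repeated +=
        if rate > max_mbps:
            break
        result = measure(rate)
        history.append(result)
        impacted = (result.loss > max_loss or
                    result.latency_ms - baseline_latency_ms > max_latency_rise_ms)
        if impacted:
            break  # stop logic: halt before the test harms live streams
        safe_rate = rate
        step += 1
    return safe_rate, history


if __name__ == "__main__":
    def simulated_path(rate: float) -> StepResult:
        congested = rate > 40  # toy path that saturates above 40 Mbps
        return StepResult(rate, 0.01 if congested else 0.0, 80.0 if congested else 30.0)

    safe, steps = step_test(simulated_path, 10, 10, 100, baseline_latency_ms=30.0)
    print(safe, len(steps))  # 40 5
```

Stopping at the first impacted step, instead of driving the link to saturation, is what distinguishes this approach from a flood-style throughput test.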

Flow monitoring

Another function of the AlvaLinks CloudRider Agent is the registration of every IP flow detected traversing the Agent. This is similar to networking techniques such as sFlow (the OSI Layer 2 packet sampling standard originated by InMon Corp., short for ‘sampled flow’) and IPFIX (Internet Protocol Flow Information Export, an IETF protocol). The application captures each new IP flow and gathers its transmit and receive statistics. A unique capability is identifying the same flow across a route between two agents. This is difficult to achieve with currently available flow monitoring solutions, because the IP flow passes through Network Address Translation (NAT), which rewrites the source IP address and port. The AlvaLinks application tracks each IP flow and its statistics (bitrate, jitter, and route variation) to provide insightful information.
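One way to recognize the same flow on both sides of a NAT is to ignore the rewritten addresses and compare the UDP payloads, which a plain NAT leaves untouched. The sketch below hashes payloads per 5-tuple at each agent and pairs flows by fingerprint overlap. This is a hypothetical technique chosen for illustration, not a description of how AlvaLinks performs the match, and it assumes both agents observe the same packets.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, Optional, Set, Tuple

FiveTuple = Tuple[str, int, str, int, str]  # src ip, src port, dst ip, dst port, proto


def fingerprints(packets: Iterable[Tuple[FiveTuple, bytes]]) -> Dict[FiveTuple, Set[str]]:
    """Collect a set of payload hashes per observed flow."""
    table: Dict[FiveTuple, Set[str]] = defaultdict(set)
    for key, payload in packets:
        table[key].add(hashlib.sha256(payload).hexdigest())
    return dict(table)


def correlate(side_a: Dict[FiveTuple, Set[str]], side_b: Dict[FiveTuple, Set[str]],
              min_overlap: float = 0.5) -> Dict[FiveTuple, FiveTuple]:
    """Pair each flow seen at agent A with the flow at agent B sharing the most payloads."""
    matches: Dict[FiveTuple, FiveTuple] = {}
    for key_a, fp_a in side_a.items():
        if not fp_a:
            continue
        best: Optional[FiveTuple] = None
        best_score = 0.0
        for key_b, fp_b in side_b.items():
            score = len(fp_a & fp_b) / len(fp_a)
            if score > best_score:
                best, best_score = key_b, score
        if best is not None and best_score >= min_overlap:
            matches[key_a] = best
    return matches


if __name__ == "__main__":
    inside = ("10.0.0.5", 5000, "203.0.113.10", 6000, "udp")
    outside = ("198.51.100.2", 40123, "203.0.113.10", 6000, "udp")  # after source NAT
    payloads = [bytes([i]) * 100 for i in range(10)]
    a = fingerprints((inside, p) for p in payloads)
    b = fingerprints((outside, p) for p in payloads[:8])  # two packets lost en route
    print(correlate(a, b))
```

Scoring by overlap rather than requiring an exact match lets the pairing survive packet loss between the two agents.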

Making sense of it all: buffer emulation

AlvaLinks consolidates the accumulated information and feeds it into the CloudRider processing logic to produce an emulated recovery buffer value. The logic draws on the AlvaLinks technical team’s many years of experience in the industry that established ARQ-based reliable video transport. It produces a dependable buffer value that should overcome the network-impairing conditions collected by the AlvaLinks probing agents. The value is calculated and presented for every second.

What is the benefit, and how can this be applied to your applications? Broadcast engineers can use the resulting value to set the SRT, Zixi, or RIST buffer. Operations teams can compare this value against their current configuration to determine whether it exceeds the buffer currently configured in the transport application. For example, AWS MediaConnect users will now be able to easily evaluate whether the resulting streaming service will be inconsistent or stable over time.
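The per-second buffer value and the configuration check described above can be illustrated with a stand-in formula. For each second, it takes the delay spread, plus a fixed number of ARQ round trips whenever packets were lost. The formula, the `arq_rounds` default, and the names are assumptions for illustration only; the actual CloudRider buffer emulation logic is not described here.

```python
from typing import List, NamedTuple, Sequence, Tuple


class SecondStats(NamedTuple):
    second: int
    min_delay_ms: float
    max_delay_ms: float
    rtt_ms: float
    lost_packets: int


def required_buffer_ms(s: SecondStats, arq_rounds: int = 2) -> float:
    """Hypothetical buffer for one second: delay spread plus ARQ round trips if loss occurred."""
    spread = s.max_delay_ms - s.min_delay_ms
    recovery = arq_rounds * s.rtt_ms if s.lost_packets else 0.0
    return spread + recovery


def audit(seconds: Sequence[SecondStats], configured_ms: float,
          arq_rounds: int = 2) -> Tuple[List[int], float]:
    """Seconds where the configured buffer would have been too small, and their share."""
    short = [s.second for s in seconds if required_buffer_ms(s, arq_rounds) > configured_ms]
    share = len(short) / len(seconds) if seconds else 0.0
    return short, share


if __name__ == "__main__":
    history = [SecondStats(0, 40, 55, 80, 0),
               SecondStats(1, 40, 70, 80, 3),   # loss plus a jitter burst
               SecondStats(2, 41, 60, 82, 0)]
    print(audit(history, configured_ms=120))
```

Evaluating every second, rather than an average, exposes the short bursts that cause freezes even when the mean looks comfortable.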

In the future, AlvaLinks will incorporate an AI analytics server to detect trends and provide guidance to the end user.

How can the AlvaLinks CloudRider solution be incorporated within your workflow?

AlvaLinks provides Docker-deployable agents with cloud-based data-gathering servers and a web interface to help the user identify delivery issues. The Docker containers can be installed as a sub-application within user equipment or as an external TAP kernel device. For cloud installations, the AlvaLinks agent can observe stream traffic using AWS VPC Traffic Mirroring, at a fixed usage cost that is not related to traffic volume.
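For the cloud deployment option, AWS VPC Traffic Mirroring delivers copies of packets encapsulated in VXLAN (RFC 7348), sent to UDP port 4789 on the mirror target. The sketch below shows how a hypothetical agent could unwrap such a packet and recover the inner IPv4/UDP flow. It handles only IPv4, UDP, and at most one 802.1Q tag, and the names are illustrative.

```python
import socket
import struct
from typing import NamedTuple, Optional

VXLAN_PORT = 4789  # UDP port on which the mirror target receives VXLAN traffic


class InnerFlow(NamedTuple):
    vni: int
    src: str
    sport: int
    dst: str
    dport: int
    payload_len: int


def decap_vxlan(udp_payload: bytes) -> Optional[InnerFlow]:
    """Unwrap one VXLAN-encapsulated frame and extract the inner IPv4/UDP flow."""
    # VXLAN header (8 bytes): flags (I bit 0x08), 3 reserved, 3-byte VNI, 1 reserved.
    if len(udp_payload) < 8 or not udp_payload[0] & 0x08:
        return None
    vni = int.from_bytes(udp_payload[4:7], "big")
    frame = udp_payload[8:]                      # inner Ethernet frame
    if len(frame) < 14:
        return None
    ethertype = struct.unpack_from("!H", frame, 12)[0]
    offset = 14
    if ethertype == 0x8100 and len(frame) >= 18:  # single 802.1Q VLAN tag
        ethertype = struct.unpack_from("!H", frame, 16)[0]
        offset = 18
    if ethertype != 0x0800:                      # IPv4 only in this sketch
        return None
    ip = frame[offset:]
    if len(ip) < 20 or ip[9] != 17:              # protocol 17 = UDP
        return None
    ihl = (ip[0] & 0x0F) * 4
    src = socket.inet_ntoa(ip[12:16])
    dst = socket.inet_ntoa(ip[16:20])
    udp = ip[ihl:]
    if len(udp) < 8:
        return None
    sport, dport, length = struct.unpack_from("!HHH", udp, 0)
    return InnerFlow(vni, src, sport, dst, dport, length - 8)
```

Once decapsulated, the inner packets can be fed to the same flow statistics used for on-premises traffic.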

Want to learn more? Contact us at [email protected]